- Amdocs and Nvidia are building AI agents that reflect brand identity and adapt to customer needs

- Consumers prefer empathetic, female-presenting AI agents with customizable traits

- AI trials show major gains in efficiency and satisfaction, but risks like hallucination remain

Amdocs is working on "personality engineering," which sounds fantastic. I'm sure you can think of annoying co-workers, family members and neighbors who could do with some personality engineering.

Alas, personality engineering isn't about making people less abrasive. It's the phrase Amdocs uses to describe tuning public-facing telco AI, such as customer service avatars, to ensure they effectively represent the brand.

AI customer service agents (or what the kids call "clankers" these days, thanks to a viral Star Wars-themed meme trending on the socials) can use all the help they can get to improve their bad reputation.

"Your agent is your brand representative, just like a human agent in the call center would be," Hillel Geiger, Amdocs global VP of corporate marketing, said. AI agents need to be configured to represent the organization's brand guidelines, language, visual identity and values, Geiger said during a presentation at June's DTW Ignite Forum in Copenhagen.

AI agents also need to take into account the context of a conversation. "If I'm calling to upgrade my plan or to buy another subscription for my younger son, it's a very different conversation than if I'm calling to complain about how high my bill is, or about the quality of service," Geiger said.

And AI agents need to take into account who they're speaking with. "A conversation with my 80-year-old Mom would be different than with my 18-year-old daughters," Geiger said.

"We take brand, context and the customer and from all those, we create a conversation. This is where an exciting domain comes into play in Amdocs. We are calling it 'personality engineering,'" Geiger said.

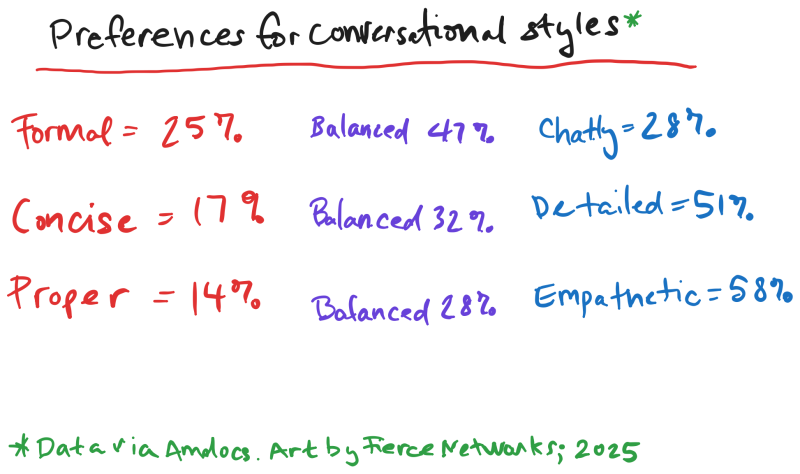

How do consumers want to relate to AI agents?

To find out how consumers prefer to relate to AI agents, Amdocs did a comprehensive study, speaking with 7,000 respondents in 14 markets, or 500 in each market, Geiger said. The company also spoke with 130 executives from communication service providers and ran focus groups. Amdocs asked about customers' preferred gender, age and tone when dealing with AI agents.

A plurality of respondents said they prefer to speak with an AI agent that presents as female rather than male. Some 45% of respondents said they prefer a female-presenting agent, 25% male and 30% had no preference, Geiger said.

Those numbers change when looking at female respondents alone. Some 67% of female respondents said they prefer their AI agents to present as female, Geiger noted.

As for the apparent age of the AI agent, Amdocs found no difference in preference among respondents of different ages. Some 39% had no preference at all, 35% preferred agents that seemed to be their own age, 14% preferred agents that seemed younger, and 12% preferred agents that seemed older.

More than half of respondents preferred an "empathetic" tone in their AI agents (58%), a finding that Geiger said he found "quite astounding."

"People know that they're conversing with a machine, with a piece of software. They're trying to humanize it. They want to feel empathy even though they know they're speaking with a software agent. I find that really puzzling," Geiger said.

Chris Penrose, Nvidia global VP of business development, said these results align with his own observations. "Even with people interacting with agents, they tend to use 'please' and 'thank you' quite a bit," he said.

Penrose presented jointly with Geiger at the DTW Ignite conference; the two companies have been collaborating on AI agents for two years.

To help optimize their experience, customers can choose the gender, age and tone of voice of the agent they interact with. "You can create endless permutations of these agents," Geiger said.

Guardrails are also important. "How do you ensure that your agent is answering questions the way you want them to answer, and not going off and talking about politics?" Penrose said.

In proof-of-concept trials, AI agents reduced average call handling times "in an incredible way" while raising first-call resolution, Geiger said.

Amdocs claimed success in a proof-of-concept (PoC) trial using GenAI for customer service at a "very large" North American operator, according to a June article by the company. The trial reduced call handling time by 63% and improved first-call resolution by 50%, which led to a 50% improvement in Net Promoter Score (NPS).

amAIzing collaboration

Nvidia and Amdocs are collaborating on using the Amdocs amAIz platform to develop specialized agents for telcos, including agents for care (such as helping customers with billing and tech support), sales, marketing, network optimization and more. "Within every one of those agents, what we are doing is creating an expert," Penrose said. The companies are building those experts based on Amdocs' knowledge of telco operations.

The companies are also working on orchestrating agents. "You may start with one intent and pivot to another intent," Penrose said. A call that starts with an inquiry about billing or a network outage might turn into a sales call. The result is like having a boardroom table with experts in marketing, sales and finance collaborating on decisions. "The best decisions are going to come when you can get all these different domains and disciplines to come together to offer the best solutions and outcomes," Penrose said.

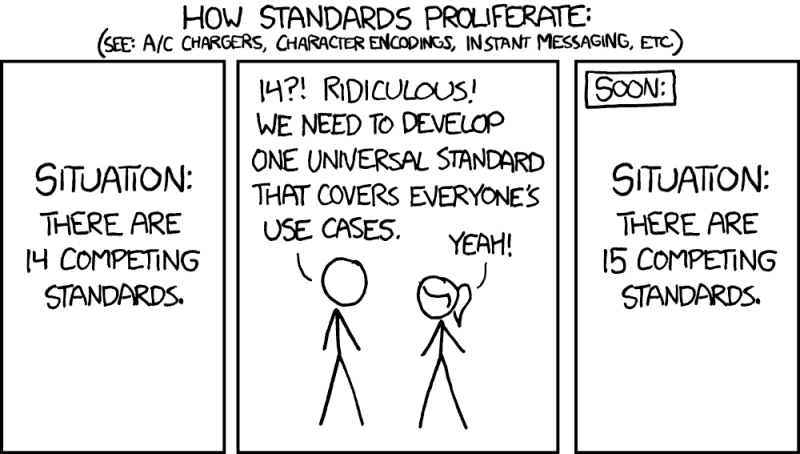

Standardizing orchestration between those pesky clankers

Several standards are emerging to permit orchestration between AI agents. Cisco donated its AGNTCY foundation for AI agent interoperability to the Linux Foundation late last month. Other standards include the Agent2Agent (A2A) protocol, also recently contributed to the Linux Foundation, and Anthropic's Model Context Protocol (MCP).

The proliferation of standards calls to mind a classic cartoon.

Nvidia's Riva platform enables building customizable AI voice agents with real-time multilingual speech recognition, transcription and translation, Penrose said. And Nvidia NeMo optimizes performance and scale.

A whole new ball game: LTMs

Telco AI was featured at Nvidia's annual GTC developer conference in March, including news that Nvidia is working with partners on building large language models specialized for telcos: large telco models (LTMs).

LTMs can reconfigure networks in minutes, where these operations formerly took days, Nvidia SVP Ronnie Vasishta said. He showed a demo of two agentic AIs communicating in a chat window to reconfigure a wireless network to serve a baseball stadium during a big game. Working together, the AIs achieved a simulated 30% improvement in performance and throughput on game day.

Humanlike AI avatars could replace chatbots and even point-and-click interfaces for human interaction, according to Alan Dennis, professor at the Kelley School of Business at Indiana University, speaking on a panel at Nvidia's GTC 2025 conference in March.

These avatars, which Dennis called "digital humans," provide higher customer satisfaction and increased likelihood of purchases compared with traditional interfaces. Companies such as AT&T, Amdocs and ServiceNow are leveraging AI to automate network operations and enhance customer service, and representatives of those companies spoke on the panel alongside Dennis.

In a June whitepaper, Amdocs introduced a framework for telco-verticalized AI agents, in collaboration with Amazon Web Services (AWS) and Nvidia. These agents must have a formal representation of domain knowledge, which Amdocs termed "ontology," as well as autonomy, digital twins of the physical network, brand engineering, and trust.

And Cisco rolled out AI Canvas, a generative AI interface for managing infrastructure, at its Cisco Live conference in June.

Fierce Network's take

Amdocs and Nvidia are making a bold play that could transform telco customer relations. But the test will be whether they can scale deployments beyond pilots and demonstrate consistent improvements in both operational efficiency and customer satisfaction.

A major hurdle will be whether consumers even want chatbots to look and act like human beings. If the clankers meme is any indication, consumers will hate the idea, no matter what Amdocs' survey of 7,000 people says.

On a personal note, I don't want generative AI to show personality. I use ChatGPT several times a day, every day. I used it to research this article. When I first started using ChatGPT, I experimented with giving it a personality. I gave it a nickname, Roscoe, and instructed it to address me as "boss." However, I found the interactions exquisitely uncomfortable, and that experiment lasted 15 minutes.

Currently, my customization prompt for ChatGPT includes the admonitions to keep responses short, neutral, straightforward, professional, clear and concise. And I use all those words to make sure ChatGPT gets the point.

My desire to keep ChatGPT machine-like is reinforced when I read stories about people driven off the deep end by their relationships with imaginary ChatGPT friends. Research shows that attachment to AI can alienate people from their friends and family, among other ill effects.

And yet, studies show most people respond better to clankers — I mean, AI agents — that exhibit personality than to purely machine-like agents. Research from as far back as 1999 shows people respond better to agents that mirror their own personalities. A 2025 study shows users in retail environments are more likely to trust AI chatbots with customizable avatars and voice cues.

Also note Amdocs' claims about the trial mentioned earlier, in which GenAI drastically reduced call handling time and improved first-call resolution, vastly improving NPS for a large North American operator.

Hallucination dangers still lurk

Problems can arise from hallucinations, where AI simply gets things wrong. Hallucinations have already emerged as a problem for companies deploying AI for customer service.

In one instance, Air Canada was ordered to pay compensation to a customer after a chatbot told him he was eligible for a bereavement-fare refund after he traveled for the funeral of a family member. And McDonald's removed AI ordering technology from 100 U.S. restaurants after errors resulted in viral videos of bizarrely misinterpreted orders, including ice cream topped with bacon and butter and hundreds of dollars' worth of chicken nuggets.

These sorts of interactions are particularly dangerous in emotionally charged situations, such as customer complaints, and can harm customer trust and expose a company to reputational damage.

However, hallucinations can be controlled by measures including grounding models in real data using retrieval-augmented generation (RAG), tied to a company's knowledge bases, CRM and internal documents; providing context and metadata, such as the work Amdocs and Nvidia are doing; and keeping humans in the loop, trained to override problems as they come up.
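For readers curious what "grounding" plus a human in the loop looks like mechanically, here is a toy sketch in Python. It is not Amdocs' or Nvidia's implementation. The knowledge-base entries, the `reply` and `retrieve` functions and the `min_overlap` threshold are all hypothetical. Simple word overlap stands in for the vector search a production RAG system would use, and the language-model generation step is omitted. What the sketch shows is the control flow: the bot answers only from a passage it can point to, and it escalates to a person when nothing relevant is on file.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical knowledge base standing in for an operator's policy docs, CRM notes, etc.
KNOWLEDGE_BASE = {
    "plan-upgrade": "Customers can upgrade their plan at any time; the new rate applies next billing cycle.",
    "bereavement-fares": "Bereavement fares must be requested before travel and cannot be refunded retroactively.",
}

PUNCTUATION = ".,?!;:"


@dataclass
class Reply:
    text: str
    source: Optional[str]  # ID of the passage the reply is grounded in, if any
    escalate: bool         # True when the conversation is handed off to a human agent


def tokenize(text: str) -> set:
    """Lowercase word set with surrounding punctuation stripped."""
    words = (w.strip(PUNCTUATION).lower() for w in text.split())
    return {w for w in words if w}


def retrieve(question: str, min_overlap: int = 2) -> Optional[Tuple[str, str]]:
    """Return the (id, passage) with the most word overlap, or None if the match is too weak."""
    query = tokenize(question)
    best_id, best_score = None, 0
    for doc_id, passage in KNOWLEDGE_BASE.items():
        score = len(query & tokenize(passage))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_id is None or best_score < min_overlap:
        return None
    return best_id, KNOWLEDGE_BASE[best_id]


def reply(question: str) -> Reply:
    hit = retrieve(question)
    if hit is None:
        # Nothing relevant on file: hand off to a person rather than let the bot improvise.
        return Reply("Let me connect you with a colleague who can help.", None, True)
    doc_id, passage = hit
    # A real RAG system would pass `passage` to a language model as context;
    # this sketch returns the grounded passage directly.
    return Reply(passage, doc_id, False)
```

Here, `reply("Can I upgrade my plan?")` returns the `plan-upgrade` passage. An off-topic question such as "What's the weather on Mars?" matches nothing strongly enough, so it is escalated to a human instead of receiving a made-up answer.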

The main test now is execution: Whether Amdocs and Nvidia can roll the technology out broadly, face down competition from hyperscaler-backed telco offerings and maintain trusted, secure behavior at scale.